Single Page Applications 1: Manipulating the Client Side ViewModel

This is the first of 3 introductory tutorials about the features for handling Single Page Applications offered by The Data Moving Plugin. The Data Moving Plugin is in its RC stage and will be released to the market in about one month.

First of all, just a little bit of theory, then we will move to a practical example. If you want, you may take a look at the video that shows the example working before reading about the theory:

See this Video in High Resolution on the Data Moving Plug-in Site

Typically, an SPA allows the user to perform several tasks without leaving its physical Html page. This result may be achieved by defining several “virtual pages” inside the same physical page. During the whole lifetime of the application only one active virtual page is visible at a time; all other pages are either hidden or completely out of the Dom because they are created dynamically by instantiating client templates. The active page interacts with just a part of the Client Side ViewModel, and only that part is kept synchronized with the server.

One can also use different techniques to enable the user to perform several tasks without leaving the physical Html page; in any case, the client ViewModel may be partitioned into subparts that are the smallest “units” that may be synchronized with the server independently of the remainder of the client side ViewModel. We call such elementary units Workspaces because they are the “data units” manipulated by the user while performing one of the several tasks allowed by the SPA.

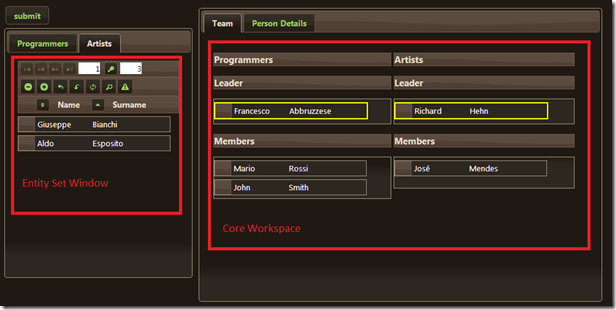

A Workspace, in turn, is composed of two conceptually different sub-parts: a kind of short-lived data structure that is used just to carry out the current task, and a set of long-lived data structures that are typically connected with a Database on the server side. Typically, on the client side, we don’t have all long-lived entities of a “given type” but just a small window of the whole Entity Set. We call Entity Set Window the set of all long-lived entities of the same type stored in the Client Side ViewModel, and we call Core Workspace the part of the Workspace that remains after removing all Entity Set Windows.

Summing up, the Client side ViewModel is composed of partially overlapping Workspaces, that are in turn composed of a Core WorkSpace and several Entity Set Windows.

In general, we can’t assume that all data of the Workspace are visible in some way in the user interface. In fact, the task currently being performed by the user may be composed of several steps (just think of the steps of a wizard), and essentially only the data “used” in the current step are visible to the user. Accordingly, each Workspace may be further split into partially overlapping UI Units, where each UI Unit is a part of the Workspace that is “visible” in the user interface at a given time.

The concept of UI Unit is very important in error handling because, while all UI Units belonging to a Workspace must be submitted simultaneously to the server, only the errors that refer to the current UI Unit can be shown to the user.
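
To make the role of UI Units in error handling more concrete, here is a minimal sketch in plain javascript. It is not the Data Moving Plug-in implementation: the `splitErrorsByUiUnit` function, the shape of the error objects, and the idea of describing a UI Unit as a list of visible path prefixes are all assumptions made just for illustration.

```javascript
// Hypothetical sketch: errors come back keyed by the path of the data they refer to.
// A UI Unit is modelled here as the list of path prefixes currently visible on screen.
function splitErrorsByUiUnit(errors, visiblePrefixes) {
  const shown = [];
  const hidden = [];
  errors.forEach(function (error) {
    const isVisible = visiblePrefixes.some(function (prefix) {
      return error.path === prefix || error.path.indexOf(prefix + ".") === 0;
    });
    (isVisible ? shown : hidden).push(error);
  });
  return { shown: shown, hidden: hidden };
}

// The whole Workspace is submitted, but only step 1 of a wizard is on screen.
const serverErrors = [
  { path: "Step1.Name", message: "Name is required" },
  { path: "Step2.EMail", message: "Invalid e-mail" }
];
const result = splitErrorsByUiUnit(serverErrors, ["Step1"]);
console.log(result.shown.length, result.hidden.length); // prints: 1 1
```

The errors that end up in `hidden` are exactly the ones that need some extra help to reach the user, since no UI element for them is currently on screen.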

The Data Moving Plug-in offers tools to properly handle Entity Set Windows and Core Workspaces, and to properly handle UI Units during validation error processing:

- Retrieval Managers take care of browsing Entity Sets in the Entity Set Windows, while updatesManagers take care of keeping the Entity Set Windows synchronized with the server by processing the updates performed by the users on the Entity Set Windows, and by dispatching the principal keys of newly created entities returned by the server.

- Whole Workspace updatesManagers take care of keeping a whole Workspace synchronized with the server, by automatically issuing commands to the updatesManagers of all Entity Set Windows contained in the Workspace and by taking care “personally” of the Core Workspace.

The communication protocol between a whole Workspace updatesManager and the server includes the possibility for the server to issue “remote commands” that modify the Core Workspace on the client side. In fact, it is often not possible for the server to send a whole “updated” Core Workspace to the client that completely substitutes the old one, because the Core Workspace might have “links” with UI elements and with other Client Side ViewModel data, and such a substitution would break them.

- The Data Moving Plug-in provides a powerful dom element-to-dom element data binding engine that enables the user to trigger “interactions” between dom elements, and also provides a Reference Knockout binding that maps UI elements to sub-parts of the Workspace, in such a way that the user can “move” such parts of the Workspace by simply moving, in an intuitive way, the UI elements that represent them. The dom element-to-dom element data binding engine has already been described in a previous tutorial and in a previous video, so in this tutorial we will focus mainly on the Reference binding.

- Error Bubbling, Entities in Error Filtering, and other enhancements of the standard Asp.net Mvc validation engine help in associating errors to data that are not immediately visible on the screen. Error handling will be described in the second tutorial about SPA applications: Single Page Applications 2: Validation Error Handling.

Let’s see better how all this works with a simple example (the same shown in the video above).

Suppose we have a list of artists and a list of programmers that are completely stored in the client side ViewModel, and suppose we would like to build a team, made of both artists and programmers, to face a web project. The team will have both a leader programmer and a leader artist, and not all people are entitled to cover the role of leader. Below is a screenshot with an indication of the UI elements that represent data of the Core Workspace and data of the Entity Set Windows:

In the programmers tab there is another Entity Set Window containing Programmer entities. Since we said all programmers and all artists are contained in the client ViewModel, the Entity Set Windows contain the whole Entity Sets. Moreover, since both the list of all programmers and the list of all artists are paged, not all programmers and not all artists belong to the current UI Unit; this means we will have difficulties in showing possible errors related to artists and programmers that are not in the current page.

As we can see in the video, new people may be added to the team being built by simply dragging them into the “Members” area. If a new Leader is selected, the old leader is automatically moved back to the original list. People that can cover the role of leader have a yellow border, and the two Leader areas accept only people entitled to cover the Leader role. Moreover, the artists area of the team accepts just artists while the programmers area of the team accepts just programmers.

The whole team building UI logic, with the constraints listed above, has been obtained without writing a single line of procedural code, just by declaring Reference bindings, Drag Sources, and Drop Targets.

For instance, below is the definition of the leader programmer area:

```cshtml
<div id="leader_programmer" class='leader-container programmers ui-widget-content' data-bind="@bindings.Reference(m => m.ProposedTeam.LeaderProgrammer).Get()">
    @ch._withEmpty(m => m.ProposedTeam.LeaderProgrammer, ExternalContainerType.div, existingTemplate: "ProgrammerTemplate0")
</div>
@Html.DropTarget("#leader_programmer", new List<string> { "LeaderProgrammer" }, rolesDropOptions)
```

The Reference binding maps the div with id “leader_programmer” to the ProposedTeam.LeaderProgrammer property of the Core Workspace, while the DropTarget declaration makes it accept Drag Sources tagged as “LeaderProgrammer”. As a consequence of these two declarations, when a UI element representing a programmer entitled to cover the role of leader (i.e. one that has the “LeaderProgrammer” tag) is dragged over this area it is “accepted”, and the data item tied to the dragged UI element by another Reference binding is moved into the knockout observable of the ProposedTeam.LeaderProgrammer property. This, in turn, triggers the instantiation of the ProgrammerTemplate0 client template because of the _withEmpty instruction, which is an enhancement of the knockout with binding.
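
The “accept and move” behaviour just described can be sketched in a few lines of plain javascript. This is only an illustration under stated assumptions: `observable` is a tiny stand-in and not the real Knockout observable, and `dropOnTarget`, `acceptedTags` and the `tags` arrays are hypothetical names that do not belong to the Data Moving Plug-in.

```javascript
// Minimal stand-in for a Knockout observable (NOT the real ko.observable).
function observable(initial) {
  let value = initial;
  return function (next) {
    if (arguments.length === 0) return value; // read
    value = next;                             // write
  };
}

// Hypothetical drop handler: accept the item only if it carries a tag the target
// accepts, then move it from its source list into the target observable.
function dropOnTarget(item, sourceList, target) {
  const accepted = item.tags.some(function (tag) {
    return target.acceptedTags.indexOf(tag) !== -1;
  });
  if (!accepted) return false;
  const index = sourceList.indexOf(item);
  if (index !== -1) sourceList.splice(index, 1); // detach from the original place
  target.value(item);                            // store in the bound property
  return true;
}

const ann = { name: "Ann", tags: ["Programmer", "LeaderProgrammer"] };
const bob = { name: "Bob", tags: ["Programmer"] };
const allProgrammers = [ann, bob];
const leaderArea = { acceptedTags: ["LeaderProgrammer"], value: observable(null) };

console.log(dropOnTarget(bob, allProgrammers, leaderArea)); // false: Bob lacks the tag
console.log(dropOnTarget(ann, allProgrammers, leaderArea)); // true: Ann becomes the leader
```

In the real page nothing like `dropOnTarget` has to be written by hand, since the declarations take care of it; the sketch only shows what the declarations amount to.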

The ProgrammerTemplate0 template is the client template automatically built by the grid on the left of the page that lists all programmers. As a consequence, the chosen leader programmer is rendered in the “Leader Programmer” area with the same appearance he had in the grid. Each member area works in a similar way:

```cshtml
<div class="members-container programmers ui-widget-content" id="all_programmers" data-bind="@bindings.Reference(m => m.ProposedTeam.Programmers).Get()">
    @ch._foreachEmpty(m => m.ProposedTeam.Programmers, ExternalContainerType.div, existingTemplate: "ProgrammerTemplate0")
</div>
@Html.DropTarget("#all_programmers", new List<string> { "Programmer" }, rolesDropOptions)
```

However, in this case the ProposedTeam.Programmers property used in the Reference binding is an observable array, so the dragged element is pushed into this array. Instead of the _withEmpty, we have a _foreachEmpty, which is an enhancement of the knockout foreach binding.

To make everything work properly, all programmers must be declared as Drag Sources tagged with “Programmer”. Moreover, all programmers entitled to cover the role of leader must also have the “LeaderProgrammer” tag:

```cshtml
@Html.DragSourceItems(".programmers", ".simple-list-item", new List<string> { "Programmer" }, new DataDragOptions { DestroyOriginal = true, AppendTo = "body" })
```

The above declaration basically says: “define all elements marked with the class ‘simple-list-item’ that are descendants of the dom element with class ‘programmers’ as Drag Sources with tag ‘Programmer’”. Now, since the whole grid containing all programmers is under a div with class “programmers”, and since all rows of this grid have the class “simple-list-item”, all programmers are defined as Drag Sources.

The declaration is extended also to future elements that will be added as descendants of the element with class “programmers”; thus, if we insert new elements in the grid they will be automatically declared as Drag Sources.

The “simple-list-item” class is added to each row of the grid as a part of its row definition instructions with:

```csharp
.ItemRootHtmlAttributes(new Dictionary<string, object> { { "class", "simple-list-item" } })
```

The “LeaderProgrammer” tag must be added to all data items with the property CanBeTeamLeader set to true. Since this property may change during processing, we must add the tag with a knockout binding attached to the CanBeTeamLeader property:

```csharp
.KoBindingsGenerator(bb => bb.CSS("LeaderProgrammer", l => l.CanBeTeamLeader)
                           .Reference(m => m)
                           .Get().ToString())
```

KoBindingsGenerator is a method of the fluent interface of the grid row definition. It accepts a function of the type

```csharp
Func<IBindingsBuilder<U>, string> knockoutBindings
```

and applies the knockout bindings defined in the body of the function to all rows of the grid, by adding them to the client row template being built by the grid. We use the IBindingsBuilder interface received as argument to build a standard Knockout css binding that adds the css class “LeaderProgrammer” whenever the property CanBeTeamLeader is true, and a Reference binding that binds each row to its associated data item. The Reference binding enables the “dragged” programmer to “release” its referred data to the data item referred by the “Drop Target”.

Since in the options of the DragSourceItems declaration we set DestroyOriginal to true, a dropped programmer is removed from the programmers list.

When we put a new Leader Programmer in the Leader Programmer area, the old Leader Programmer returns to the programmers list because we defined the programmers list as the mirroring pool for the programmer entities (this is done in javascript):

```javascript
ko.mirroring.pool = function (obj) {
    var dis = obj["MainCompetence"];
    dis = ko.utils.unwrapObservable(dis);
    if (dis === "Artist") return TeamBuilding.ClientModel.AllArtsist.Content;
    else if (dis === "Programmer") return TeamBuilding.ClientModel.AllProgrammers.Content;
    else return null;
};
```

All mirroring pools are defined by assigning a javascript function to the ko.mirroring.pool configuration variable. This function is passed all items that were removed from their places because of a Reference binding based interaction and that were put in no other place, so that they “disappeared” from the client side ViewModel. This function is their last chance to find a “home”: it analyzes the properties of each item and possibly finds a new “home” for it.

Moving either an artist or a programmer into the detail area assigns a reference to its associated data item to the knockout observable CurrentDetail in the client ViewModel without detaching the data item from its previous place, because in this case the DestroyOriginal option is not set to true. This triggers the instantiation of a template that shows the data item in detail mode:
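
The “last chance to find a home” idea can be illustrated with a small standalone sketch. It is written under assumptions and is not the plug-in code: `rehome`, `poolByCompetence` and `lists` are hypothetical names, and plain properties and arrays are used in place of knockout observables.

```javascript
// Hypothetical dispatcher: orphaned items are handed to a pool function that
// returns the array they should go back to, or null when no home exists.
function rehome(orphans, poolFor) {
  const lost = [];
  orphans.forEach(function (item) {
    const pool = poolFor(item);
    if (pool) pool.push(item);
    else lost.push(item);
  });
  return lost;
}

const lists = { artists: [], programmers: [] };

// Pool function that decides by looking at a property of the item.
function poolByCompetence(item) {
  if (item.MainCompetence === "Artist") return lists.artists;
  if (item.MainCompetence === "Programmer") return lists.programmers;
  return null;
}

const unplaced = rehome(
  [{ Name: "Ann", MainCompetence: "Programmer" }, { Name: "Zed", MainCompetence: "Tester" }],
  poolByCompetence
);
console.log(lists.programmers.length, unplaced.length); // prints: 1 1
```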

```cshtml
@ch._with0(m => m.CurrentDetail,
    @<text>
        <p>
            <span class='ui-widget-header'>@item.LabelFor(m => m.Name)</span>
            :
            @item.TypedEditDisplayFor(m => m.Name, simpleClick: true)
            @item.ValidationMessageFor(m => m.Name, "*")
        </p>
        <p>
            <span class='ui-widget-header'>@item.LabelFor(m => m.Surname)</span>
            :
            @item.TypedEditDisplayFor(m => m.Surname, simpleClick: true)
            @item.ValidationMessageFor(m => m.Surname, "*")
        </p>
        <p>
            <span class='ui-widget-header'>@item.LabelFor(m => m.EMail)</span>
            :
            @item.TypedEditDisplayFor(m => m.EMail, simpleClick: true)
            @item.ValidationMessageFor(m => m.EMail, "*")
        </p>
        <p>
            <span class='ui-widget-header'>@item.LabelFor(m => m.Address)</span>
            :
            @item.TypedEditDisplayFor(m => m.Address, simpleClick: true)
            @item.ValidationMessageFor(m => m.Address, "*")
        </p>
        <p>
            <span class='ui-widget-header'>@item.LabelFor(m => m.CanBeTeamLeader)</span>
            :
            @item.CheckBoxFor(m => m.CanBeTeamLeader)
        </p>
    </text>
    , ExternalContainerType.koComment, afterRender: "mvcct.ko.detailErrors", forceHtmlRefresh: true, isDetail: true)
```

The _with0 instruction is a different enhancement of the knockout with binding, that accepts an in-line razor helper as client template. Among its arguments there is one named isDetail that we set to true, to inform the Data Moving Plug-in engine that the template is the detail view of a data item. This declaration triggers a synchronization behavior between the original UI of the data item and its detail view.

Having finished describing how the user can manipulate the Workspace, we can move on to see how server-client interaction takes place. The two Entity Set Windows of the Workspace are implemented with two grids. For a detailed description of how to “code” grids you may refer to Data Moving Plugin Controls. Here we just point out that, since all data items are already on the client side, we must use a local retrievalManager to execute the paging, sorting and filtering queries:

```csharp
.StartLocalRetrievalManager(m => m.AllProgrammers.Content, true, "TeamBuilding.programmersRM").EndRetrievalManager()
```

The first argument is the source of all items to be queried (a property of the client side ViewModel); the second argument, set to true, requires the execution of an initial query as soon as the page is loaded (in order to show some initial data in the grid); and the third argument is where to put the newly created retrievalManager.

The updatesManagers of the two grids are both root updatesManagers since our items are not children of any one-to-many relation, as in all other examples we have seen in Data Moving Plugin Controls. However, in this case they don’t communicate directly with the server, because we will define a whole Workspace updatesManager that will take care of collecting data from the updatesManagers of the two grids, handling the updates of the Core Workspace, communicating with the server, and dispatching the responses of the server to the updatesManagers of the two grids.
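
To give an idea of what executing paging, sorting and filtering queries locally means, here is a minimal sketch that works on an in-memory array. It is not the retrievalManager implementation: `queryLocal` and its `options` object are hypothetical and serve only to show the filter, sort, page sequence.

```javascript
// Hypothetical local query: filter, then sort, then page an in-memory array.
function queryLocal(items, options) {
  const filtered = options.filter ? items.filter(options.filter) : items.slice();
  if (options.sortBy) {
    const key = options.sortBy;
    filtered.sort(function (a, b) {
      return a[key] < b[key] ? -1 : a[key] > b[key] ? 1 : 0;
    });
  }
  const start = (options.page - 1) * options.pageSize;
  return {
    totalCount: filtered.length, // needed by a pager to compute the number of pages
    items: filtered.slice(start, start + options.pageSize)
  };
}

const people = [
  { Name: "Carl", CanBeTeamLeader: true },
  { Name: "Ann", CanBeTeamLeader: true },
  { Name: "Bob", CanBeTeamLeader: false },
  { Name: "Dora", CanBeTeamLeader: true }
];
const firstPage = queryLocal(people, {
  filter: function (p) { return p.CanBeTeamLeader; },
  sortBy: "Name",
  page: 1,
  pageSize: 2
});
console.log(firstPage.items.map(function (p) { return p.Name; }).join(", ")); // prints: Ann, Carl
```

Since the source array is never modified, the same items can be queried again with different paging, sorting or filtering settings without any round trip to the server.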

The definition of the programmers updatesManager is:

```csharp
.CreateUpdatesManager<TeamBuildingDestinationViewModel>("TeamBuilding.programmersUpdater", true)
    .BasicInfos(m => m.Id, m => m.ProgrammersChanges, "TeamBuilding.DestinationModel")
    .IsRoot(Url.Action("UpdateTeam"))
.EndUpdatesManager()
```

It appears more complex than the updatesManagers we have seen in Data Moving Plugin Controls. The call to CreateUpdatesManager contains the whole path where to store the updatesManager on the client side, instead of the name of the property of the ViewModel where to store it; that’s why the second optional parameter is set to true. Moreover, the method call contains a generic type instantiation: the ViewModel we will use to submit all changes to the server:

```csharp
public class TeamBuildingDestinationViewModel
{
    public Team ProposedTeam { get; set; }
    public OpenUpdater<Employed, Guid?> ProgrammersChanges { get; set; }
    public OpenUpdater<Employed, Guid?> ArtistsChanges { get; set; }
}
```

The first property will be filled with the whole Core Workspace, while the other two properties will be filled with the programmers and artists change sets. That’s why the call to BasicInfos has two more parameters after the specification of the principal key: the second parameter is the property of the destination ViewModel where to store the programmers change set, and the third parameter is the property of the whole client ViewModel where to put the destination ViewModel before sending it to the server. The IsRoot method contains a fake url since the destination ViewModel will be posted to the server by the whole Workspace updatesManager.

The whole Workspace updatesManager must be defined in javascript since it is not tied to any specific Data Moving Plugin control:

```javascript
$('#outerContainer').attr('data-update'),
TeamBuilding.ClientModel, "ProposedTeam", TeamBuilding.DestinationModel, "ProposedTeam",
{ updatersIndices: [TeamBuilding.programmersUpdater, TeamBuilding.artistsUpdater],
  classifyEntity: function (x) {
      if (x['Id'] && x['MainCompetence'])
          return x.MainCompetence() == "Programmer" ? 0 : 1;
      else
          return null;
  },
  ........
```

The first argument is the url where to submit the destination ViewModel, which is extracted from an Html5 attribute of a dom element. The second argument is the whole client ViewModel and the third argument is the path where to find the Core Workspace within the whole client ViewModel. The fourth argument is the destination ViewModel, and the fifth argument is the path to the place where to store the Core Workspace within the destination ViewModel. Then we have an options argument with several properties. Here we analyze just a few of them, since all others are connected to error handling, which will be discussed in Single Page Applications 2: Validation Error Handling.

updatersIndices is an array containing the updatesManagers of all Entity Set Windows of the Workspace; in our case, the updatesManagers of the two grids.

classifyEntity is a function that, given an entity, must return the index in the previous array of the updatesManager of its Entity Set Window. This function enables the whole Workspace updatesManager to adequately process all entities it finds inside the Core Workspace.

That’s enough for everything to work properly! When the user clicks submit, the update method of TeamBuilding.updater is invoked, and the destination ViewModel is filled and submitted to the server. When the server sends the response, the parts of the response destined to the Entity Set Windows updatesManagers are automatically dispatched to them and processed. As a consequence, modifications to the Core Workspace returned as remote commands by the server are applied, keys created for newly inserted entities are dispatched each to its entity, and errors associated to various data elements are dispatched next to the adequate UI elements:
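
The following sketch shows one way a classification function of this kind could be used to route the entities found inside a Core Workspace. It is only an illustration: `collectEntities` is a hypothetical walker and not the plug-in algorithm, and `readOnly` is a tiny stand-in for a knockout observable.

```javascript
// Stand-in for a read-only Knockout observable (NOT the real ko.observable).
function readOnly(value) { return function () { return value; }; }

// Same rule as in the article: index 0 for programmers, 1 for artists, null otherwise.
function classifyEntity(x) {
  if (x["Id"] && x["MainCompetence"])
    return x.MainCompetence() === "Programmer" ? 0 : 1;
  return null;
}

// Hypothetical walker: visit every object and array in the Core Workspace and
// group the entities it finds by the index returned from classify.
function collectEntities(node, classify, buckets) {
  if (node === null || typeof node !== "object") return buckets;
  if (Array.isArray(node)) {
    node.forEach(function (child) { collectEntities(child, classify, buckets); });
    return buckets;
  }
  const index = classify(node);
  if (index !== null) {
    buckets[index].push(node);
    return buckets; // entities are treated as leaves in this sketch
  }
  Object.keys(node).forEach(function (key) {
    collectEntities(node[key], classify, buckets);
  });
  return buckets;
}

const proposedTeam = {
  LeaderProgrammer: { Id: "p1", MainCompetence: readOnly("Programmer") },
  LeaderArtist: { Id: "a1", MainCompetence: readOnly("Artist") },
  Programmers: [{ Id: "p2", MainCompetence: readOnly("Programmer") }],
  Artists: []
};
const found = collectEntities(proposedTeam, classifyEntity, [[], []]);
console.log(found[0].length, found[1].length); // prints: 2 1
```

Each bucket corresponds to one position of the updatersIndices array, so bucket 0 would be handed to the programmers updatesManager and bucket 1 to the artists one.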

```javascript
$('#submit').click(function () {
    var form = $(this).closest('form');
    TeamBuilding.ClientModel.CurrentDetail(null);
    if (form.validate().form()) {
        TeamBuilding.updater.update(form);
    }
});
```

Let’s take a look at the action method:

```csharp
public HttpResponseMessage Post(TeamBuildingDestinationViewModel model)
{
    if (ModelState.IsValid)
    {
        try
        {
            var builder = ApiResponseBuilder.NewResponseBuilder(model, ModelState, true, "Error in client request");
            builder.Process(m => m.ArtistsChanges, model.ProposedTeam, m => m.Id);
            builder.Process(m => m.ProgrammersChanges, model.ProposedTeam, m => m.Id);
            //business processing here

            var response = builder.GetResponse();
            return response.Wrap(Request);
        }
        catch (Exception ex)
        {
            ModelState.AddModelError("", ex.Message);
            return Request.CreateResponse(System.Net.HttpStatusCode.InternalServerError, new ApiServerErrors(ModelState));
        }
    }
    return Request.CreateResponse(System.Net.HttpStatusCode.InternalServerError, new ApiServerErrors(ModelState));
}
```

This code is very similar to the code we have seen in the action methods that process the grids updates in Data Moving Plugin Controls: we create an ApiResponseBuilder and then we call the Process method on each of the Entity Set change sets we received in the destination ViewModel. Since our principal keys are Guids we don’t need to specify a custom key generation function, so the indication of which property is the principal key suffices. However, now the Process method has three arguments instead of two. We used a different overload, one that accepts a Core Workspace as second argument. Why do we need this further argument? Simply because we also have to process the changes of the entities that are contained in the Core Workspace. In fact, a programmer or artist that we added to the team might have been modified, or might be a newly inserted item. The addition of the new argument enables the Process method to also include the entities contained in the Core Workspace in the change sets, in their appropriate places, if this is necessary.

Now suppose we want to modify the client side Core Workspace by appending the suffix “Changed” to all programmers’ names and by adding two more programmers to the team. We need to add adequate remote commands to the response. How do we build them? Quite easy! It is enough to create a changes builder object and then to mimic these operations on it:

```csharp
var changer = builder.NewChangesBuilder(model.ProposedTeam);

changer.Down(m => m.Programmers)
    .UpdateModelIenumerable(m => m, m => m.Name, (m, i) => m.Name + "Changed");
changer.UpdateModelField(m => m.LeaderProgrammer, m => m.Name, model.ProposedTeam.LeaderProgrammer.Name + "Changed");
changer.Down(m => m.Programmers)
    .AddToArray(m => m[0],
        new Employed()
        {
            Id = Guid.NewGuid(),
            MainCompetence = "Programmer",
            Name = "John1",
            Surname = "Smith1",
            Address = "New York, USA",
            EMail = "John@dummy.us",
            CanBeTeamLeader = true
        }, 0, true).AddToArray(m => m[0],
        new Employed()
        {
            Id = Guid.NewGuid(),
            MainCompetence = "Programmer",
            Name = "John2",
            Surname = "Smith2",
            Address = "New York, USA",
            EMail = "John@dummy.us"
        }, 0, true);
```

The first instruction modifies the names of the programmers that are simple members of the team (actually it just creates the remote command to do this). We go down the Programmers property of the Core Workspace and then call UpdateModelIEnumerable, which applies a modification to all elements of an IEnumerable. The first argument specifies the IEnumerable to be modified; since we already moved into the IEnumerable it is just m => m. The second argument specifies the property of each element that must be modified, and the third argument specifies how to modify it.

The second instruction modifies the LeaderProgrammer name. It is self-explanatory.

Finally, the third instruction adds two more programmers. We move down the Programmers property, and then we call AddToArray twice. The first argument of each call specifies the place in the javascript array where to place the newly added element: in our case we place it at index 0... but wait: since the last argument of the call is set to true, the enumeration starts from the bottom, so we are just appending the new elements at the bottom of the array.
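
The effect of counting the index from the bottom can be reproduced with a few lines of javascript. The `addToArray` helper below is hypothetical and only mimics what the remote command is described to do on the client side; in particular, its behaviour for indexes other than 0 is an assumption.

```javascript
// Hypothetical helper: insert at `index`, counted from the start of the array
// or, when fromBottom is true, from its end (index 0 then means "append").
function addToArray(array, item, index, fromBottom) {
  const position = fromBottom ? array.length - index : index;
  array.splice(position, 0, item);
  return array;
}

const team = ["Ann", "Bob"];
addToArray(team, "John1", 0, true);
addToArray(team, "John2", 0, true);
console.log(team.join(", ")); // prints: Ann, Bob, John1, John2

const fromTop = addToArray(["Ann", "Bob"], "John1", 0, false);
console.log(fromTop.join(", ")); // prints: John1, Ann, Bob
```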

Now, in order to include all “remote commands” in the response we must substitute:

```csharp
var response = builder.GetResponse();
```

with:

```csharp
var response = builder.GetResponse(changer.Get());
```

However, we have a problem: the two programmers that we added to the team might already be contained in the programmers list, so we might have an entity duplication in the client side ViewModel. Luckily, the Data Moving Plug-in offers tools to enforce uniqueness. We may trigger the processing that enforces uniqueness of entities in the onUpdateComplete callback of the whole Workspace updatesManager (defined in its options):

```javascript
onUpdateComplete: function (e, result, status) {
    if (!e.success) return;
    var hash = {};
    mvcct.updatesManager.utils.entitiesInWorkSpace(TeamBuilding.ClientModel.ProposedTeam, hash);
    TeamBuilding.programmersUpdater.filterObservable(hash);
    TeamBuilding.artistsUpdater.filterObservable(hash);
},
```

The mvcct.updatesManager.utils.entitiesInWorkSpace method extracts all entities contained in the Core Workspace, and indexes them into a hash table. After that, each Entity Set updatesManager ensures they are not contained in the Entity Set Window it takes care of. The task is carried out efficiently because of the indexing performed by mvcct.updatesManager.utils.entitiesInWorkSpace.

That’s all for now!

Stay tuned and take a look also at all other Data Moving Plug-in introductory tutorials and videos
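
The two-step technique (index first, then filter) can be sketched as follows. This is a standalone illustration under assumptions, not the plug-in code: `indexEntities` and `removeIndexed` are hypothetical names, plain arrays replace observable arrays, and entities are assumed to be identified by their `Id` property.

```javascript
// Hypothetical sketch: index the entities of the Core Workspace by key...
function indexEntities(entities, hash) {
  entities.forEach(function (entity) { hash[entity.Id] = true; });
  return hash;
}

// ...then drop from an Entity Set Window every entity whose key is in the index.
function removeIndexed(windowItems, hash) {
  return windowItems.filter(function (entity) {
    return !Object.prototype.hasOwnProperty.call(hash, entity.Id);
  });
}

const teamMembers = [{ Id: "p1", Name: "John1" }, { Id: "p2", Name: "John2" }];
const programmersWindow = [
  { Id: "p0", Name: "Ann" },
  { Id: "p1", Name: "John1" }
];

const keys = indexEntities(teamMembers, {});
const deduplicated = removeIndexed(programmersWindow, keys);
console.log(deduplicated.map(function (e) { return e.Name; }).join(", ")); // prints: Ann
```

Building the index once and then testing each entity against it keeps the cost proportional to the total number of entities, instead of comparing every entity of the window with every entity of the team.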

Francesco

Tags: Grid, DataGrid, MVC Controls Toolkit, Data Moving, Single Page Applications, Knockout, Mvc