Asp.Net Mvc 6 offers a new alternative to Html Helpers: Tag Helpers. Tag Helpers are similar to Html Helpers, but they use an Html-tag-like syntax. Basically, they are custom tags with custom attributes that are translated into standard Html tags during server-side Razor processing.

They are somewhat similar to Html5 custom elements, but they are processed on the server side, so they don’t need JavaScript to work properly and their output is visible to search engine robots. Html5 custom elements are not fully supported by all major browsers, but they are emulated on all browsers by several JavaScript frameworks, such as Knockout.js.

Thus, one might plan to build TagHelper-based Mvc controls that create their final Html either on the server side or on the client side, with the help of a JavaScript framework that supports custom elements. More specifically, the same Razor View might generate either the final Html or some custom-elements-based code to be interpreted on the client side by a JavaScript framework, depending on settings specified in the View itself, in the controller, or in a configuration file. Both server-side and client-side generation have their advantages and disadvantages (among them: server controls are visible to search engines, but client controls are more flexible), so the possibility of switching between them without changing the Razor code appears very interesting.

This post is not a basic tutorial on Tag Helpers, but a tutorial on how to implement advanced template based controls, like grids, menus, or tree-views with TagHelpers. An introduction to TagHelpers is here; please read it if you are new to custom Tag Helpers implementation.

This tutorial assumes you have:

- A Visual Studio 2015 based development environment. If you have not installed VS 2015 yet, please refer to my previous post on how to build your VS 2015 based development environment.

- Asp.Net 5 beta8 installed. Instructions on how to move to beta8 may be found here.

Template Based Controls

Complex controls like TreeViews and Grids use templates to specify how each node/row is rendered. Usually, they also have default templates, so the developer needs to specify templates just for the “pieces” that need custom rendering. For instance, in the case of a grid, a developer wishing to use the default row template might need to specify templates just for the few columns that need custom rendering. Controls may allow several more custom templates, such as a custom pager template, a custom header template, a footer template, and so on.

In this tutorial I’ll show just the basic technique for implementing templates with TagHelpers. For this purpose we define a simple <iterate>…</iterate> TagHelper that instantiates a template on all elements of an IEnumerable. Moreover, all input fields created in the output Html will have the right names for the Model Binder to read back the IEnumerable when the form containing the <iterate> tag is submitted (see here if you are new to model binding to an IEnumerable).

The Test Project

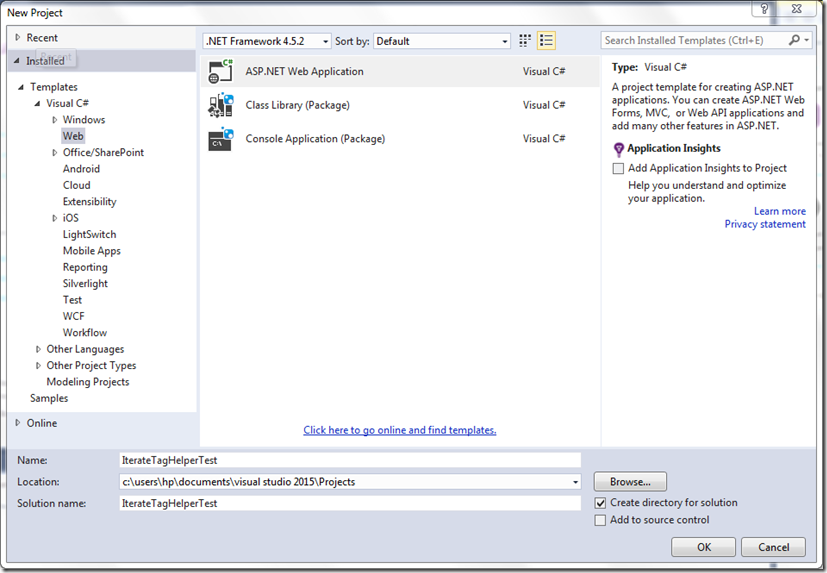

Open VS 2015 and select: File –> New –> Project…

Now select “ASP.NET Web Application” and call the project “IterateTagHelperTest” (please use exactly the same name, otherwise you will not be able to use my code “as it is” because of namespace mismatches).

Now choose “Web Application” under “ASP.NET Preview Templates”, and click “OK” without changing anything else.

We will test our new TagHelper with a new View handled by the HomeController.

We need a ViewModel, so as a first step go to the “ViewModels” folder and add a child folder named “Home” for all HomeController ViewModels.

Then add a new class called “TagTestViewModel.cs” to this folder.

Finally, delete the code created by the scaffolder and add the following code:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;

    namespace IterateTagHelperTest.ViewModels.Home
    {
        public class Keyword
        {
            public string Value { get; set; }

            public Keyword(string value)
            {
                Value = value;
            }

            // Parameterless constructor, needed by the model binder.
            public Keyword()
            {
            }
        }

        public class TagTestViewModel
        {
            public IEnumerable<Keyword> Keywords { get; set; }
        }
    }

It is a simple ViewModel containing an IEnumerable to test our iterate TagHelper.

Now move to the HomeController and add the following using:

    using IterateTagHelperTest.ViewModels.Home;

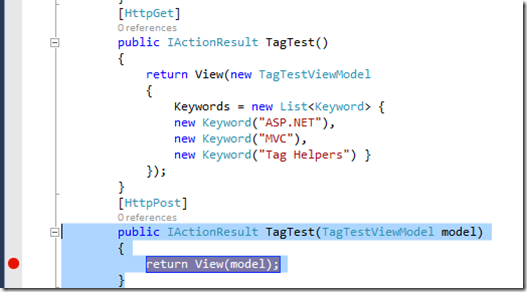

Then add a Get and a Post action method to test our TagHelper (without modifying any of the other action methods):

    [HttpGet]
    public IActionResult TagTest()
    {
        return View(new TagTestViewModel
        {
            Keywords = new List<Keyword> {
                new Keyword("ASP.NET"),
                new Keyword("MVC"),
                new Keyword("Tag Helpers") }
        });
    }

    [HttpPost]
    public IActionResult TagTest(TagTestViewModel model)
    {
        return View(model);
    }

Now go to the Views/Home folder and add a new View for the newly created action methods. Call it “TagTest” to match the name of the action methods.

Remove the default code and substitute it with:

    @model IterateTagHelperTest.ViewModels.Home.TagTestViewModel
    @{
        ViewBag.Title = "Tag Test";
    }

    <h2>@ViewBag.Title</h2>

We will insert the remainder of the code after the implementation of our TagHelper. Now we need just a title to run the application and test that everything we have done works properly.

Before testing the application we need a link to reach the newly defined View. Open the Views/Shared/_Layout.cshtml default layout page and locate the Main Menu:

    <ul class="nav navbar-nav">
        <li><a asp-controller="Home" asp-action="Index">Home</a></li>
        <li><a asp-controller="Home" asp-action="About">About</a></li>
        <li><a asp-controller="Home" asp-action="Contact">Contact</a></li>
    </ul>

And add a new menu item for the newly created page:

    <ul class="nav navbar-nav">
        <li><a asp-controller="Home" asp-action="Index">Home</a></li>
        <li><a asp-controller="Home" asp-action="About">About</a></li>
        <li><a asp-controller="Home" asp-action="Contact">Contact</a></li>
        <li><a asp-controller="Home" asp-action="TagTest">Test</a></li>
    </ul>

Now run the application and click on the “Test” top menu item: you should go to our newly created test page with title “Tag Test”.

Now that our test environment is ready we may move to the TagHelper implementation!

Handling Template Current Scope

A template is the whole Razor code enclosed within a template-definition TagHelper. For instance, in the case of a grid we might have a <column-template asp-for="…."> </column-template> TagHelper that encloses custom column templates, a <header-template> </header-template> that encloses custom header templates, and so on. Since our simple <iterate> TagHelper includes a single template, we will take the whole content of the <iterate> TagHelper as the template. In the case of more complex controls, the main control TagHelper usually includes several child template-definition TagHelpers.

According to our previous definition, each template may contain tags, Razor instructions, and variable definitions. Since the same template is typically called several times, we need a way to ensure that the scope of all Razor variables is limited to the template itself. Moreover, all asp-for attributes inside the template must refer not to the Razor View ViewModel but to the current model the template is being instantiated on.

Something like:

    <iterate asp-for="Keywords">
        @using (var s = Html.NextScope<Keyword>())
        {
            var m = s.M;
            <div class="form-group">
                <div class="col-md-10">
                    <input asp-for="@m().Value" class="form-control" />
                </div>
            </div>
        }
    </iterate>

should do the job. Here Html.NextScope<Keyword>() takes the current scope passed by the father <iterate> TagHelper, puts it in a variable, and makes it the active scope. s.M() returns the current model from the current scope. s.M must be a function, otherwise m would be included in the input names (Keywords[i].m.Value, instead of the correct Keywords[i].Value).

When the template execution terminates, the scope object is disposed, and its Dispose method removes it from the top of the scopes stack and re-activates the previously active scope, if any (we need a stack because templates might nest).
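The push-on-creation, pop-on-dispose idea can be tried in isolation with a small console sketch. PrefixScope and ScopeDemo are hypothetical names introduced only for this illustration, and a static property plays the role of the active Html prefix; this is not the TemplateHelper code of this post.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a template scope (illustration only).
public sealed class PrefixScope : IDisposable
{
    // Prefixes that were active before each scope was opened.
    private static readonly Stack<string> Saved = new Stack<string>();

    // Plays the role of the currently active Html prefix.
    public static string CurrentPrefix { get; private set; } = "";

    public PrefixScope(string prefix)
    {
        Saved.Push(CurrentPrefix);   // remember the outer prefix
        CurrentPrefix = prefix;      // activate the new one
    }

    public void Dispose()
    {
        CurrentPrefix = Saved.Pop(); // re-activate the outer prefix
    }
}

public static class ScopeDemo
{
    public static void Main()
    {
        using (new PrefixScope("Keywords[0]"))
        {
            Console.WriteLine(PrefixScope.CurrentPrefix);
            using (new PrefixScope("Keywords[0].Synonyms[1]"))
            {
                // Nested template: the inner prefix is active here.
                Console.WriteLine(PrefixScope.CurrentPrefix);
            }
            // Back in the outer template: the outer prefix is active again.
            Console.WriteLine(PrefixScope.CurrentPrefix);
        }
    }
}
```

Because each scope saves the prefix it replaces, nested using blocks unwind in the right order without the template author doing anything explicit.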

The <iterate> TagHelper gets the list of Keywords thanks to its asp-for="Keywords" attribute, and calls the template on each object of the list:

    foreach (var x in model)
    {
        TemplateHelper.DeclareScope(ViewContext.ViewData, x, string.Format("{0}[{1}]", fullName, i++));
        sb.Append((await context.GetChildContentAsync(false)).GetContent());
    }

The TemplateHelper.DeclareScope helper basically puts all scope information into an item of the Razor View ViewData dictionary, where the previously discussed Html.NextScope method can retrieve it. The current scope contains the current model and the HtmlPrefix to be added to all names, in our case Keywords[0]…Keywords[n]. This way all input controls will have names like “Keywords[i].Value”, instead of simply “Value”. This is necessary for model binding to work properly when the form is submitted.

When the scope is activated by Html.NextScope, the HtmlPrefix is temporarily put into the TemplateInfo.HtmlFieldPrefix field of the Razor View ViewData dictionary. This is enough to ensure that it automatically prefixes all names. When the current scope is deactivated, the previous TemplateInfo.HtmlFieldPrefix value is restored.
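To make the naming rule concrete, here is a minimal sketch of how an indexed prefix and a property name combine into the names the Model Binder expects. FieldNames, Combine, and IndexedNames are hypothetical helpers written for this illustration; they only imitate, in simplified form, what the framework does with the Html prefix.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (illustration only, not framework code).
public static class FieldNames
{
    // Joins a prefix and a property name; an empty prefix leaves the name untouched.
    public static string Combine(string prefix, string name)
    {
        if (string.IsNullOrEmpty(prefix)) return name;
        if (string.IsNullOrEmpty(name)) return prefix;
        return prefix + "." + name;
    }

    // Builds the names of the same property on the first 'count' elements of a collection.
    public static List<string> IndexedNames(string collection, int count, string property)
    {
        var names = new List<string>();
        for (int i = 0; i < count; i++)
        {
            names.Add(Combine(string.Format("{0}[{1}]", collection, i), property));
        }
        return names;
    }

    public static void Main()
    {
        // Names of the Value inputs for a three-element Keywords collection.
        foreach (var name in IndexedNames("Keywords", 3, "Value"))
        {
            Console.WriteLine(name);
        }
    }
}
```

Consecutive indexes starting from zero are what allow the posted fields to be read back as a single collection.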

In the next two sections I give all implementation details of both the scope stack and the <iterate> TagHelper.

Implementing the Scope Stack

We insert the Scope Stack code in a new folder. Go to the Web Project root and add a new folder called HtmlHelpers.

We start with the definition of an interface containing all scope information. Under the previously created HtmlHelpers folder add a new interface called ITemplateScope.cs. Then replace the scaffolded code with:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using Microsoft.AspNet.Mvc.ViewFeatures;

    namespace IterateTagHelperTest.HtmlHelpers
    {
        public interface ITemplateScope : IDisposable
        {
            string Prefix { get; set; }
            ITemplateScope Father { get; set; }
        }

        public interface ITemplateScope<T> : ITemplateScope
        {
            Func<T> M { get; set; }
        }
    }

The interface has a generic parameter that will be instantiated with the template model type. The model is returned by a function in order to get the right names inside the template (this way all names start from the first property after the function call, see the previous section). All members that do not depend on the generic parameter are grouped into the non-generic interface ITemplateScope, which the final interface inherits from. Moreover, ITemplateScope inherits from IDisposable since it must be used within a using statement. Together with the model, the scope contains the current HtmlPrefix (see the previous section) and a pointer to the father scope (this way, a template also has access to the father template model in the case of nested templates).
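Why a function call cuts the name can be seen with plain expression trees. The sketch below is a deliberately simplified imitation of name extraction, not the framework code: NameSketch and GetName are hypothetical, and the Keyword class is repeated only to keep the example self-contained. It walks member accesses from right to left and stops at the first node that is not a member access.

```csharp
using System;
using System.Linq.Expressions;

// Hypothetical copy of the Keyword class, repeated to keep the sketch self-contained.
public class Keyword
{
    public string Value { get; set; }
}

public static class NameSketch
{
    // Simplified imitation: collect member names from right to left and stop
    // at the first node that is not a member access (e.g. a delegate invocation).
    public static string GetName<TResult>(Expression<Func<TResult>> expression)
    {
        var name = "";
        var member = expression.Body as MemberExpression;
        while (member != null)
        {
            name = name.Length == 0 ? member.Member.Name : member.Member.Name + "." + name;
            member = member.Expression as MemberExpression;
        }
        return name;
    }

    public static void Main()
    {
        var keyword = new Keyword { Value = "MVC" };
        Keyword m = keyword;              // the model stored in a plain variable
        Func<Keyword> f = () => keyword;  // the model returned by a function

        Console.WriteLine(GetName(() => m.Value));   // the variable name ends up in the path
        Console.WriteLine(GetName(() => f().Value)); // the invocation cuts the path
    }
}
```

With the plain variable the path includes the variable name, while with the function only the property name survives, which is the behavior the M function relies on.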

Now we are ready to implement the Html.NextScope<T> and TemplateHelper.DeclareScope methods. The whole implementation is based on a stack of active scopes, whose items are instances of a class that is declared private inside the TemplateHelper static class:

    private class templateActivation
    {
        public string HtmlPrefix { get; set; }
        public object Model { get; set; }
        public ITemplateScope Scope { get; set; }
    }

The class contains the father HtmlPrefix to restore when the scope is deactivated, a non-generic reference to the scope interface, and the scope model as an object, since the model is not accessible through the ITemplateScope interface. The same class is used to pass scope information between method calls.

The DeclareScope method stores the new scope information in the ViewData dictionary:

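    // Key of the scope that has been declared but not yet activated.
    // It must differ from the key that holds the scope stack.
    private const string lastActivation = "_template_last_activation_";

    public static void DeclareScope(ViewDataDictionary vd, object model, string newHtmlPrefix)
    {
        var a = new templateActivation
        {
            Model = model,
            HtmlPrefix = newHtmlPrefix
        };
        vd[lastActivation] = a;
    }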

Then NextScope<T> retrieves it to activate a new scope:

    private const string templateStack = "_template_stack_";
    // Must differ from templateStack: the pending activation and the
    // scope stack are two separate ViewData entries.
    private const string lastActivation = "_template_last_activation_";
    private const string externalActivation = "_template_external_";

    public static ITemplateScope<T> NextScope<T>(this IHtmlHelper helper)
    {
        var activation = helper.ViewContext.ViewData[lastActivation] as templateActivation;
        if (activation != null)
        {
            // Consume the pending activation.
            helper.ViewContext.ViewData[lastActivation] = null;
            var stack = helper.ViewContext.ViewData[templateStack] as Stack<templateActivation>;
            ITemplateScope father = null;
            if (stack != null && stack.Count > 0)
            {
                father = stack.Peek().Scope;
            }
            if (father == null)
            {
                father = helper.ViewContext.ViewData[externalActivation] as ITemplateScope;
            }
            return new TemplateScope<T>(helper.ViewContext.ViewData, activation)
            {
                M = () => (T)(activation.Model),
                Prefix = activation.HtmlPrefix,
                Father = father
            };
        }
        else return null;
    }

If a newly declared scope is found, a new instance of the TemplateScope<T> class, which implements the ITemplateScope<T> interface, is created. The TemplateScope<T> class is declared private inside the TemplateHelper class. The stack is accessed just to find the father ITemplateScope. If the stack is empty, the method tries to get the father scope from another ViewData entry, which might be used to keep activation information across partial view calls (not implemented in this example).

The stack push is handled in the TemplateScope<T> constructor:

    public TemplateScope(ViewDataDictionary vd, templateActivation a)
    {
        this.vd = vd;
        a.Scope = this;
        PushTemplate(a);
    }

The stack pop, instead, is handled by the TemplateScope<T> Dispose method:

    public void Dispose()
    {
        PopTemplate();
    }

In order to add the whole implementation described above to your project, go to the previously created HtmlHelpers folder and add a class called TemplateHelper.cs. Then replace the scaffolded code with the code below:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using Microsoft.AspNet.Mvc.Rendering;
    using Microsoft.AspNet.Mvc.ViewFeatures;

    namespace IterateTagHelperTest.HtmlHelpers
    {
        public static class TemplateHelper
        {
            // ViewData key of the stack of active scopes.
            private const string templateStack = "_template_stack_";
            // ViewData key of the scope declared by DeclareScope and not yet activated.
            // It must differ from templateStack, otherwise DeclareScope would overwrite the stack.
            private const string lastActivation = "_template_last_activation_";
            private const string externalActivation = "_template_external_";

            private class templateActivation
            {
                public string HtmlPrefix { get; set; }
                public object Model { get; set; }
                public ITemplateScope Scope { get; set; }
            }

            private class TemplateScope<T> : ITemplateScope<T>
            {
                private ViewDataDictionary vd;

                public TemplateScope(ViewDataDictionary vd, templateActivation a)
                {
                    this.vd = vd;
                    a.Scope = this;
                    PushTemplate(a);
                }

                public string Prefix { get; set; }
                public Func<T> M { get; set; }
                public ITemplateScope Father { get; set; }

                public void Dispose()
                {
                    PopTemplate();
                }

                private void PushTemplate(templateActivation a)
                {
                    // Save the prefix that is active now, to restore it on pop.
                    var activation = new templateActivation
                    {
                        HtmlPrefix = vd.TemplateInfo.HtmlFieldPrefix,
                        Model = a.Model,
                        Scope = a.Scope
                    };
                    var stack = vd[templateStack] as Stack<templateActivation> ?? new Stack<templateActivation>();
                    stack.Push(activation);
                    vd[templateStack] = stack;
                    // Activate the prefix of the new scope.
                    vd.TemplateInfo.HtmlFieldPrefix = a.HtmlPrefix;
                }

                private void PopTemplate()
                {
                    var stack = vd[templateStack] as Stack<templateActivation>;
                    if (stack != null && stack.Count > 0)
                    {
                        // Restore the prefix that was active before this scope.
                        vd.TemplateInfo.HtmlFieldPrefix = stack.Pop().HtmlPrefix;
                    }
                }
            }

            public static void DeclareScope(ViewDataDictionary vd, object model, string newHtmlPrefix)
            {
                var a = new templateActivation
                {
                    Model = model,
                    HtmlPrefix = newHtmlPrefix
                };
                vd[lastActivation] = a;
            }

            public static ITemplateScope<T> NextScope<T>(this IHtmlHelper helper)
            {
                var activation = helper.ViewContext.ViewData[lastActivation] as templateActivation;
                if (activation != null)
                {
                    // Consume the pending activation.
                    helper.ViewContext.ViewData[lastActivation] = null;
                    var stack = helper.ViewContext.ViewData[templateStack] as Stack<templateActivation>;
                    ITemplateScope father = null;
                    if (stack != null && stack.Count > 0)
                    {
                        father = stack.Peek().Scope;
                    }
                    if (father == null)
                    {
                        father = helper.ViewContext.ViewData[externalActivation] as ITemplateScope;
                    }
                    return new TemplateScope<T>(helper.ViewContext.ViewData, activation)
                    {
                        M = () => (T)(activation.Model),
                        Prefix = activation.HtmlPrefix,
                        Father = father
                    };
                }
                else return null;
            }
        }
    }

Now we are ready to move to the TagHelper implementation.

Implementing the <iterate> TagHelper

Go to the root of the web Project and add a folder called TagHelpers, then add a new class called IterateTagHelper.cs to this folder. Replace the scaffolded code with the code below:

    using Microsoft.AspNet.Mvc.Rendering;
    using Microsoft.AspNet.Razor.Runtime.TagHelpers;
    using System.Text;
    using Microsoft.AspNet.Mvc.ViewFeatures;
    using System.Threading.Tasks;
    using System.Collections;
    using IterateTagHelperTest.HtmlHelpers;

    namespace IterateTagHelperTest.TagHelpers
    {
        [HtmlTargetElement("iterate", Attributes = ForAttributeName)]
        public class IterateTagHelper : TagHelper
        {
            private const string ForAttributeName = "asp-for";

            [HtmlAttributeNotBound]
            [ViewContext]
            public ViewContext ViewContext { get; set; }

            [HtmlAttributeName(ForAttributeName)]
            public ModelExpression For { get; set; }

            public override async Task ProcessAsync(TagHelperContext context, TagHelperOutput output)
            {
                var name = For.Name;
                var fullName = ViewContext.ViewData.TemplateInfo.GetFullHtmlFieldName(name);
                IEnumerable model = For.Model as IEnumerable;
                // No container tag around the template instances.
                output.TagName = string.Empty;
                StringBuilder sb = new StringBuilder();
                if (model != null)
                {
                    int i = 0;
                    foreach (var x in model)
                    {
                        // Declare the scope of the current element, then render the template on it.
                        TemplateHelper.DeclareScope(ViewContext.ViewData, x, string.Format("{0}[{1}]", fullName, i++));
                        sb.Append((await context.GetChildContentAsync(false)).GetContent());
                    }
                }
                output.Content.SetContentEncoded(sb.ToString());
            }
        }
    }

Tag attributes are mapped to properties with the help of the HtmlAttributeName attribute, and are automatically populated when the IterateTagHelper instance is created. The asp-for attribute, which in our case selects the collection to iterate on, is mapped to the For property, whose type is ModelExpression. As a result of the match, a ModelExpression instance containing the collection and its name is created.

The ViewContext property is not mapped to any tag attribute since it is decorated with the HtmlAttributeNotBound attribute. Instead it is populated with the Razor View ViewContext since it is decorated with the ViewContext attribute. We need the View ViewContext to extract the View ViewData dictionary.

The remainder of the code is straightforward:

- We get the name of the asp-for bound property.

- We add a possible HtmlPrefix to the above name by calling the GetFullHtmlFieldName method. We need it to pass the right HtmlPrefix to the scope of each IEnumerable element. Without the right prefix the collection can’t be bound by the receiving Action Method when the form is submitted.

- We extract the collection and cast it to the right type.

- Since we don’t want to enclose all template instantiations within a container we set the output.TagName to the empty string.

- We create a StringBuilder to build our content.

- For each IEnumerable element we create a new scope with the right HtmlPrefix, and then we get the element HTML by calling GetChildContentAsync. We pass a false argument to prevent the method from returning the previously cached string (otherwise we would obtain N copies of the HTML of the first collection element).

- Finally, we set the string obtained by chaining all children HTML as the tag content by calling the SetContentEncoded method. The Encoded suffix indicates that the string is already encoded, so it is not HTML-encoded again.
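The steps listed above can be tried outside of Mvc with a small sketch in which the template is just a delegate. TemplateRunner and Iterate are hypothetical names introduced for this illustration; in the real TagHelper the template is the tag content and the prefix travels through the scope, not through a delegate argument.

```csharp
using System;
using System.Collections;
using System.Text;

// Hypothetical, framework-free imitation of the iteration loop (illustration only).
public static class TemplateRunner
{
    // template(item, prefix) returns the markup for one element of the collection.
    public static string Iterate(IEnumerable model, string fullName, Func<object, string, string> template)
    {
        var sb = new StringBuilder();
        if (model != null)
        {
            int i = 0;
            foreach (var x in model)
            {
                // One indexed prefix per element, as in the TagHelper loop.
                var prefix = string.Format("{0}[{1}]", fullName, i++);
                sb.Append(template(x, prefix));
            }
        }
        return sb.ToString();
    }

    public static void Main()
    {
        var html = Iterate(
            new[] { "ASP.NET", "MVC" },
            "Keywords",
            (x, prefix) => string.Format("<input name=\"{0}.Value\" value=\"{1}\" />\n", prefix, x));
        Console.Write(html);
    }
}
```

A null collection simply produces empty content, which mirrors the null check in the TagHelper.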

Importing HtmlHelper and TagHelper

Now we need to import our Html Helper and our TagHelper. We may import them either locally in each View using them or globally by adding the import instructions to the Views/_ViewImports.cshtml View. Before the addition the Views/_ViewImports.cshtml file should look like this:

    @using IterateTagHelperTest
    @using IterateTagHelperTest.Models
    @using IterateTagHelperTest.ViewModels.Account
    @using IterateTagHelperTest.ViewModels.Manage
    @using Microsoft.AspNet.Identity
    @addTagHelper "*, Microsoft.AspNet.Mvc.TagHelpers"

Add:

    @using IterateTagHelperTest.HtmlHelpers
    @addTagHelper "*, IterateTagHelperTest"

To get:

    @using IterateTagHelperTest
    @using IterateTagHelperTest.Models
    @using IterateTagHelperTest.ViewModels.Account
    @using IterateTagHelperTest.ViewModels.Manage
    @using Microsoft.AspNet.Identity
    @addTagHelper "*, Microsoft.AspNet.Mvc.TagHelpers"
    @using IterateTagHelperTest.HtmlHelpers
    @addTagHelper "*, IterateTagHelperTest"

The @addTagHelper "*, IterateTagHelperTest" instruction imports all TagHelpers contained in the Web Site dll (whose name is IterateTagHelperTest).

Testing our TagHelper

Now we may finally test our TagHelper. Open the previously defined Views/Home/TagTest.cshtml View and replace its content with the content below:

    @model IterateTagHelperTest.ViewModels.Home.TagTestViewModel
    @using IterateTagHelperTest.ViewModels.Home
    @{
        ViewBag.Title = "Tag Test";
    }

    <h2>@ViewBag.Title</h2>
    <div>
        <form asp-controller="Home" asp-action="TagTest" method="post" class="form-horizontal" role="form">
            <iterate asp-for="Keywords">
                @using (var s = Html.NextScope<Keyword>())
                {
                    var m = s.M;
                    <div class="form-group">
                        <div class="col-md-10">
                            <input asp-for="@m().Value" class="form-control" />
                        </div>
                    </div>
                }
            </iterate>
            <div class="form-group">
                <div class="col-md-offset-2 col-md-10">
                    <button type="submit" class="btn btn-default">Submit</button>
                </div>
            </div>
        </form>
    </div>

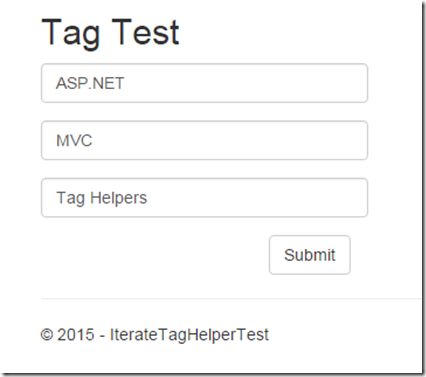

Now run the application and click the “Test” main menu item to go to our test page. You should see something like:

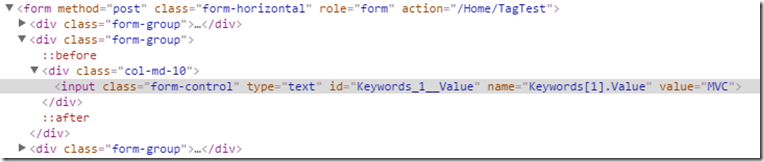

The TagHelper actually instantiates the template on every element of the Keywords IEnumerable! The input field names appear correct (Keywords[0].Value, Keywords[1].Value, and so on).

Let’s put a breakpoint in the receiving Action Method of the HomeController to verify that the IEnumerable is bound properly:

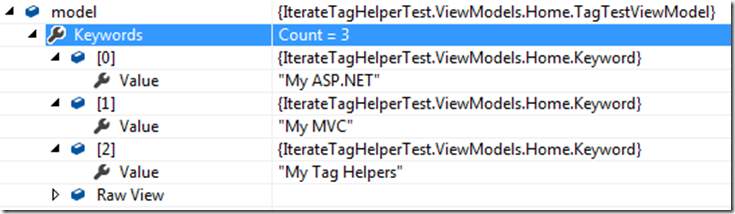

Now let’s modify our Keywords a little and submit the form. When the breakpoint is hit, let’s inspect the received model.

That’s all for now! The whole project may be downloaded here.


Stay Tuned!

Francesco

Tags: TagHelper, Html, MVC Controls, Asp.Net 5